Smart Belt

The project used a plastic tube and an MPU6050 sensor to measure body angles during sleep. We observed changes in respiratory rate at different angles. In response to apnea episodes, the apnea protection system emits a loud sound and displays a message on the belt-mounted phone, which vibrates to stimulate the respiratory system.
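As a rough illustration of how body angle and an apnea alert could be derived, the sketch below computes roll and pitch from the MPU6050's accelerometer axes and flags a long gap between breaths. The function names, the breath-timestamp input, and the 10-second gap are assumptions made for this example, not details taken from the project.

```python
import math


def tilt_angles(ax, ay, az):
    """Return (roll, pitch) in degrees from accelerometer readings in g.

    Valid only while the sensor is roughly at rest, so that gravity
    dominates the measured acceleration.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


def apnea_suspected(last_breath_s, now_s, max_gap_s=10.0):
    """True when no breath has been seen for at least max_gap_s seconds.

    max_gap_s is an assumed threshold for illustration only.
    """
    return (now_s - last_breath_s) >= max_gap_s


if __name__ == "__main__":
    print(tilt_angles(0.0, 1.0, 0.0))     # sensor lying on its side
    print(apnea_suspected(100.0, 112.0))  # 12 s without a breath
```

In a real device the breath timestamps would come from the belt's respiration signal, and the alert would trigger the phone's sound, message, and vibration.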

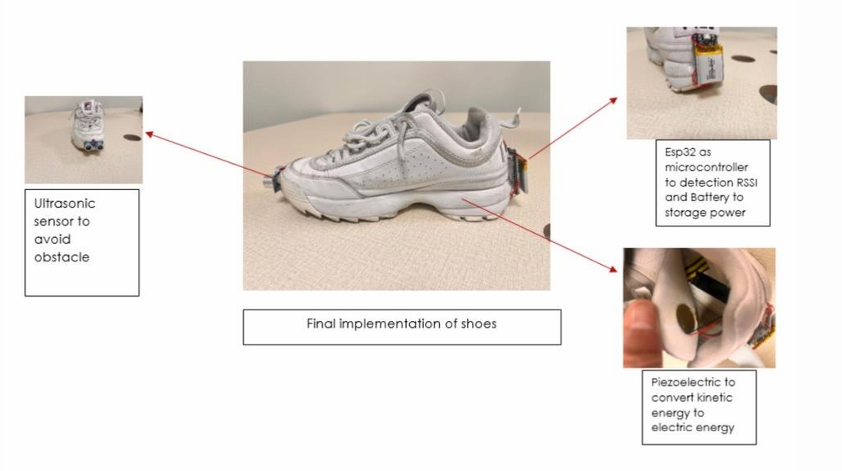

Smart Shoes

This project aims to solve indoor mobility challenges for the visually impaired by designing smart shoes that combine Wi-Fi RSSI-based indoor navigation with real-time voice guidance and kinetic energy harvesting. The system ensures accurate location tracking without GPS, and it operates sustainably without the need for external charging.
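One common way to turn Wi-Fi RSSI readings into a position estimate is the log-distance path loss model followed by a weighted centroid of known access point locations. The sketch below shows that approach; it is not necessarily the method used in the shoes, and the reference power, path loss exponent, and access point coordinates are assumed values that would need calibration on site.

```python
def rssi_to_distance(rssi_dbm, ref_power_dbm=-40.0, path_loss_exp=2.5):
    """Estimate distance in metres with the log-distance path loss model.

    ref_power_dbm is the assumed RSSI at 1 m; path_loss_exp is an assumed
    environment constant (about 2 in free space, higher indoors).
    """
    return 10 ** ((ref_power_dbm - rssi_dbm) / (10 * path_loss_exp))


def weighted_centroid(readings):
    """Estimate (x, y) from a list of ((x, y), rssi_dbm) readings.

    Each access point is weighted by the inverse of its estimated
    distance, so nearer access points pull the estimate harder.
    """
    total = x_sum = y_sum = 0.0
    for (x, y), rssi in readings:
        weight = 1.0 / rssi_to_distance(rssi)
        total += weight
        x_sum += weight * x
        y_sum += weight * y
    return x_sum / total, y_sum / total


if __name__ == "__main__":
    # Hypothetical access points at known positions (metres).
    readings = [((0.0, 0.0), -50.0), ((10.0, 0.0), -60.0), ((0.0, 10.0), -70.0)]
    print(weighted_centroid(readings))
```

The resulting coordinates could then be matched against a floor map to choose the next voice instruction.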

EBISAD: Enhanced Body Imaging and Surgical Assistance Device

EBISAD is an AI-powered surgical assistant designed to enhance the success rate of surgeries by providing real-time support to surgeons. The system simulates a high-tech surgical environment using interactive panels, voice-guided commands, vitals monitoring, and alert systems, all within an immersive display setup. EBISAD begins by scanning a patient’s ID to retrieve vital health data, continuously tracks parameters like heart rate, oxygen level, and blood pressure, and instantly alerts the surgeon in case of any complications. It then provides guided step-by-step instructions for the procedure, ensuring accuracy and reducing human error. The entire experience is presented through a custom-built visor box and a curved monitor, creating a focused, high-tech user interface. This project is designed for use in hospitals and surgical training centers, aiming to revolutionize the operating room by combining technology, precision, and safety for 100% surgical success.
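The vitals monitoring and alerting step can be pictured as a range check on each incoming sample. The sketch below is a minimal version of that idea; the field names and limits are placeholders chosen for illustration, not clinical guidance and not values from EBISAD.

```python
# Assumed (low, high) limits per vital sign; illustrative placeholders only.
VITAL_LIMITS = {
    "heart_rate_bpm": (50, 120),
    "spo2_percent": (92, 100),
    "systolic_mmhg": (90, 160),
}


def check_vitals(sample, limits=VITAL_LIMITS):
    """Return one alert string for each vital that is missing or out of range."""
    alerts = []
    for name, (low, high) in limits.items():
        value = sample.get(name)
        if value is None:
            alerts.append(f"{name}: no reading")
        elif not low <= value <= high:
            alerts.append(f"{name}: {value} outside {low}-{high}")
    return alerts


if __name__ == "__main__":
    sample = {"heart_rate_bpm": 72, "spo2_percent": 88, "systolic_mmhg": 118}
    for alert in check_vitals(sample):
        print("ALERT", alert)
```

Treating a missing reading as an alert is a deliberate choice here, since a disconnected sensor should not pass silently.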

Pulse-Point

**Pulse-Point** is a smart AI-powered mobile application designed to promote good health and well-being. It helps users stay hydrated with timely water reminders, detects common skin conditions using machine learning, and provides instant health advice through an interactive chatbot. The app also includes a personalized health checkup quiz and helps users book doctor appointments based on their location. By combining multiple health services into one easy-to-use platform, Pulse-Point ensures early detection, guided self-care, and improved access to medical support.

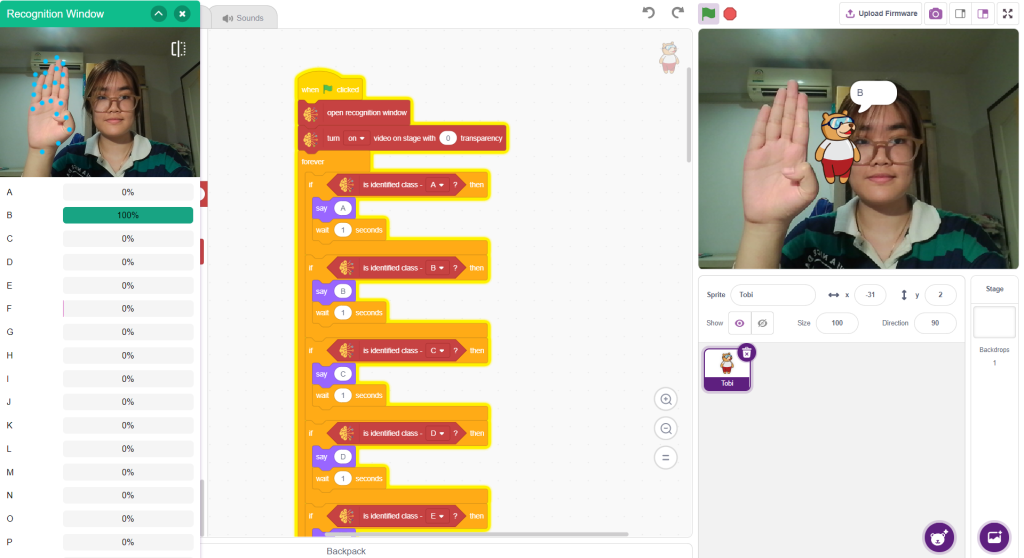

Sign Language for Hearing-Impaired Children (For Preschoolers)

This project focuses on developing an innovative approach to support sign language learning for preschool-aged deaf children. It leverages computer vision and object detection technologies to track hand positions, combined with machine learning to create a real-time hand gesture recognition system.

This technology enhances the effectiveness of sign language learning for young children through interactive and immersive experiences, enabling them to practice and develop their signing skills more efficiently. Furthermore, it serves as a valuable tool for improving access to education and knowledge for children with hearing impairments, contributing to a more inclusive learning environment.
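To make the gesture recognition step more concrete, the sketch below classifies a hand pose by comparing normalized hand landmarks against stored templates with a nearest-neighbour rule. This is a simplified stand-in for the project's machine learning model; the landmark format (a list of 2D points with the wrist first) and the template labels are assumptions for the example.

```python
import math


def normalize(landmarks):
    """Shift so the first point (assumed to be the wrist) is the origin,
    then scale so the farthest point lies at distance 1."""
    x0, y0 = landmarks[0]
    shifted = [(x - x0, y - y0) for x, y in landmarks]
    scale = max(math.hypot(x, y) for x, y in shifted) or 1.0
    return [(x / scale, y / scale) for x, y in shifted]


def classify(landmarks, templates):
    """Return the label of the template closest to the given landmarks."""
    sample = normalize(landmarks)

    def distance(label):
        reference = normalize(templates[label])
        return sum(math.dist(a, b) for a, b in zip(sample, reference))

    return min(templates, key=distance)


if __name__ == "__main__":
    # Tiny hypothetical templates with three points each.
    templates = {
        "open": [(0, 0), (0, 1), (1, 1)],
        "fist": [(0, 0), (0, 0.2), (0.1, 0.1)],
    }
    print(classify([(5, 5), (5, 7), (7, 7)], templates))
```

Normalizing first makes the comparison independent of where the hand appears in the frame and how close it is to the camera, which matters for small children moving in front of a fixed webcam.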

EATelligence

EATelligence is a smart health app that creates personalized meals and lifestyle plans based on your body’s real-time needs. Using wearable data and AI-powered image scans of the tongue, skin, and eyes, it diagnoses imbalances and recommends what to eat, drink, and do each day—blending Ayurvedic wisdom with modern technology.

The idea was inspired by a personal experience: my grandfather’s sodium levels dropped dangerously during cancer treatment, and no one noticed because hospitals rely on generic treatment plans. EATelligence aims to fix that by offering tailored, real-time health insights.

At the core of the project is Ayurveda, a 5,000-year-old Indian system of medicine that believes food is medicine. While modern health apps only track basic metrics, Ayurveda focuses on understanding your Dosha (body type), Agni (digestive fire), and Prakriti (constitution). This app uses those principles to guide nutrition, mental health, and lifestyle.

EATelligence isn’t just a wellness tracker; it’s a holistic health companion. It connects ancient knowledge with cutting-edge tools to help people eat smarter, live better, and heal naturally.

Let’s use food as medicine, not medicine as food.

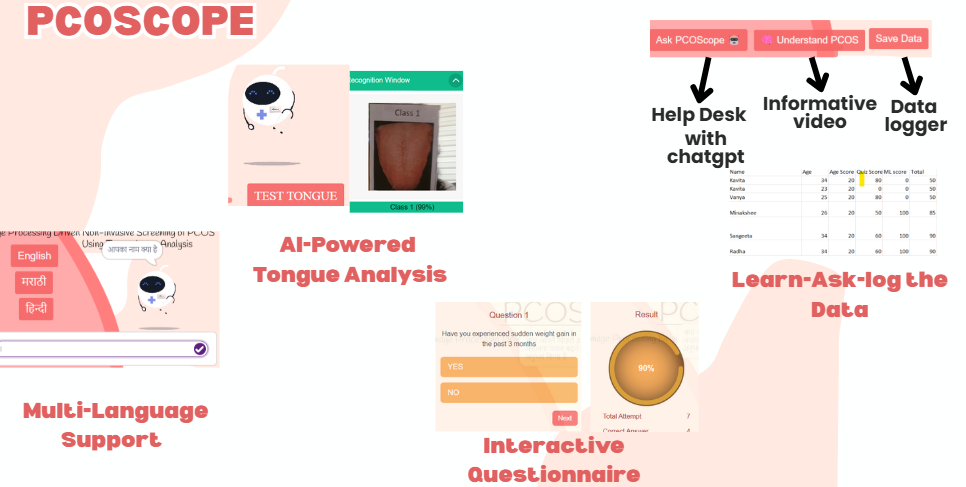

PCOScope: Image Processing-Driven Non-Invasive Screening of PCOS Using Tongue Image Analysis

PCOScope is a revolutionary, AI-powered, non-invasive screening tool designed to detect Polycystic Ovary Syndrome (PCOS) early and accurately. Combining modern technology with traditional health insights, PCOScope analyzes high-quality tongue images and user-reported symptoms to determine the likelihood of PCOS. The tool uses advanced machine learning algorithms trained on a large dataset to recognize subtle signs such as coating, color, and texture changes in the tongue — indicators of underlying hormonal imbalances.

In addition to tongue analysis, PCOScope includes a quick symptom quiz, data logging features, a multilingual chatbot help desk, and informative videos to raise awareness and guide users. Requiring only a smartphone, it is accessible, affordable, and ideal for low-resource settings. With over 94% accuracy in trials, PCOScope empowers women by enabling early detection, encouraging timely medical consultation, and supporting proactive health management. It’s more than a screening tool — it’s a step toward healthcare democratization.

Tongue-Controlled Wheelchair

A Tongue Controlled Wheelchair (TWC) is a cutting-edge mobility solution for individuals with severe physical disabilities. This innovative device uses sensors placed externally, around the user’s mouth, to detect and interpret tongue movements. These sensors recognize subtle changes in tongue position and translate them into commands that control the wheelchair’s movement, speed, and other functions. This technology enables users with limited limb or hand mobility to navigate their environment with greater ease and independence. The external placement of sensors ensures comfort and avoids potential issues associated with mouthpieces or implants. The TWC system often features customizable controls and integration with other assistive technologies, enhancing its versatility. By utilizing tongue movements for control, the TWC offers a user-friendly and effective way to improve mobility and quality of life for those with significant motor impairments, allowing them greater autonomy in their daily lives.
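The translation from sensor readings to wheelchair commands might look like the sketch below, which picks the most strongly activated sensor and maps it to a command, falling back to a stop when nothing is clearly active. The sensor names, the command set, and the 0.6 threshold are hypothetical and would depend on the actual hardware.

```python
# Hypothetical mapping from external sensor position to wheelchair command.
COMMANDS = {
    "upper_lip": "forward",
    "lower_lip": "reverse",
    "left_cheek": "turn_left",
    "right_cheek": "turn_right",
}


def decode_command(readings, threshold=0.6):
    """Map normalized sensor activations (0 to 1) to a single command.

    Returns "stop" when there are no readings or when the strongest
    activation is below the assumed threshold.
    """
    if not readings:
        return "stop"
    sensor, value = max(readings.items(), key=lambda item: item[1])
    if value < threshold:
        return "stop"
    return COMMANDS.get(sensor, "stop")


if __name__ == "__main__":
    readings = {"upper_lip": 0.2, "lower_lip": 0.0,
                "left_cheek": 0.1, "right_cheek": 0.9}
    print(decode_command(readings))
```

Defaulting to "stop" for weak, missing, or unknown input keeps the chair still whenever the signal is ambiguous.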

Portkey

Our project is designed to break down communication barriers between Indian Sign Language (ISL) users and those unfamiliar with it by using Augmented Reality (AR) technology in an inclusive and innovative way. Built entirely in Pictoblox, the system uses AR glasses worn by the hearing person, which capture the hand gestures in ISL. These gestures are recognized using MediaPipe and Teachable Machine, then converted into both text (displayed on the AR glasses) and speech through text-to-speech technology. The Pictoblox code is deployed to the Vuzix M400 smart glasses using an Android application, ensuring portability and ease of use.
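Between per-frame recognition and the text or speech output, a system like this usually needs some smoothing so that a flickering prediction does not produce repeated or spurious words. The Python sketch below shows one simple way to do that, emitting a label only after it has been seen for several consecutive frames. It is an illustration of the idea rather than the project's Pictoblox logic, and the class name and frame count are assumptions.

```python
from collections import deque


class GestureStabilizer:
    """Emit a gesture label once it has been stable for hold_frames frames."""

    def __init__(self, hold_frames=5):
        self.hold_frames = hold_frames
        self.recent = deque(maxlen=hold_frames)
        self.last_emitted = None

    def update(self, label):
        """Feed one per-frame prediction; return a label to display or None."""
        self.recent.append(label)
        window_full = len(self.recent) == self.hold_frames
        stable = window_full and len(set(self.recent)) == 1
        if stable and label != self.last_emitted:
            self.last_emitted = label
            return label
        return None


if __name__ == "__main__":
    stabilizer = GestureStabilizer(hold_frames=3)
    for frame_label in ["hello", "hello", "hello", "hello", "thanks"]:
        word = stabilizer.update(frame_label)
        if word is not None:
            print(word)  # would be shown on the glasses and sent to text-to-speech
```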

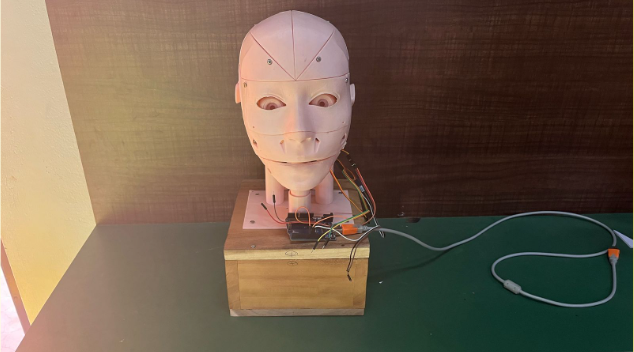

School Help Desk Robo

Visitors and students at schools often face difficulties navigating the campus, finding information, and getting answers to common questions, which can lead to confusion, wasted time, and increased workload for school staff.